AI and Bias Removal a Herculean Task

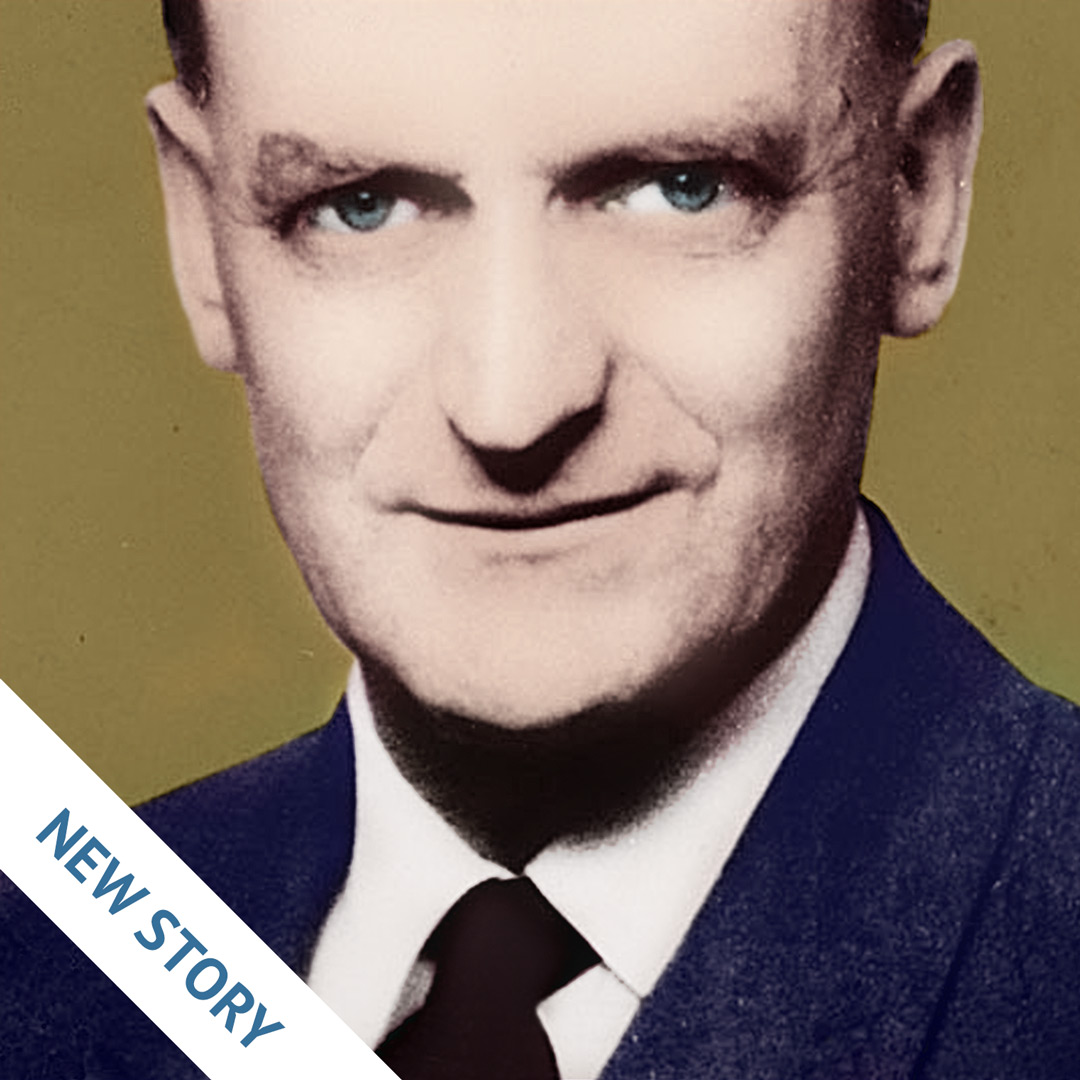

“Google’s algorithm for detecting hate speech tended to punish tweets written by African Americans, classifying 46 per cent of non-offensive messages as hate language, even if they were not, according to a report by MIT Technology Review. How did it happen?” Raul Rodriguez investigates for Indian IT magazine Dataquest, and looks to New Zealand computer scientist Professor Ian Witten from the University of Waikato for some answers.

“According to Witten, most of the routines that work in solving mysteries and finding surprises about big data are organized around two objectives: the classification tasks and the regression tasks,” Rodriguez writes.

“In the first case, that of classification tasks, against a certain event or case in the world, the system must assign a category. For example, if a person in a photo is a man or a woman, or if a certain sound corresponds to that produced by an electric guitar or a bass guitar, or if a message on Twitter is positive or negative based on polarity, which would be sentiment analysis.

“The correct classification of an event or case allows many subsequent operations, such as extracting statistics, detecting trends or operating with filters: for example, to determine which hate messages should be removed from social networks.

“But nothing is so simple. In order for the classification routines to operate, it is necessary to have a collection of previously collected cases, i.e. a dataset. If what you want is to classify the sex of certain faces, you must have images of faces, already manually labelled (supervised learning), that feed the algorithms to find the formulas to classify. The amount of data required is so much that these databases have started to be called big data.”
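To make the idea of learning from manually labelled cases concrete, here is a minimal sketch in Python of a toy sentiment classifier. It is not the method used by any system mentioned in the article: the messages, the labels and the function names `train` and `classify` are all invented for illustration, and the word-counting approach is a deliberately simple stand-in for a real classification routine.

```python
from collections import Counter

# Hypothetical, hand-labelled training messages (invented for illustration).
LABELLED_MESSAGES = [
    ("i love this great song", "positive"),
    ("what a wonderful happy day", "positive"),
    ("great work i love it", "positive"),
    ("i hate this awful noise", "negative"),
    ("what a terrible sad day", "negative"),
    ("awful work i hate it", "negative"),
]


def train(examples):
    """Count how often each word appears under each label."""
    counts = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(text.split())
    return counts


def classify(counts, text):
    """Assign the label whose training words overlap most with the text."""
    scores = {
        label: sum(word_counts[word] for word in text.split())
        for label, word_counts in counts.items()
    }
    return max(scores, key=scores.get)


model = train(LABELLED_MESSAGES)
print(classify(model, "i love this happy day"))
print(classify(model, "what a terrible noise"))
```

Because this toy model knows only the words in its labelled examples, its output depends entirely on how those examples were chosen and labelled.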

Original article by Raul Rodriguez, Dataquest, April 14, 2020.